Though invisible, the path exists for them. And we are all the same.

This project aims to create an environment where visually impaired individuals can freely enjoy travel and leisure without external assistance, helping them experience the joy of travel on equal terms with sighted individuals through auditory, tactile, and real-time descriptive cues.

Furthermore, this project was developed as an entry for an assistive device design competition (2025 Assistive Technology Idea Contest) and was refined beyond a conceptual idea into a practical and scalable service.

SILENT WAY

Deliverables

User Research

UX Flow

UI, GUI

Prototypes

Design Guidelines

VIDEO

Storyboarding

Video production

Editing

Role

UX Designer

Team

Solo project

Timeline

Sep 2025

Journey in Action

Flexible Navigation Modes

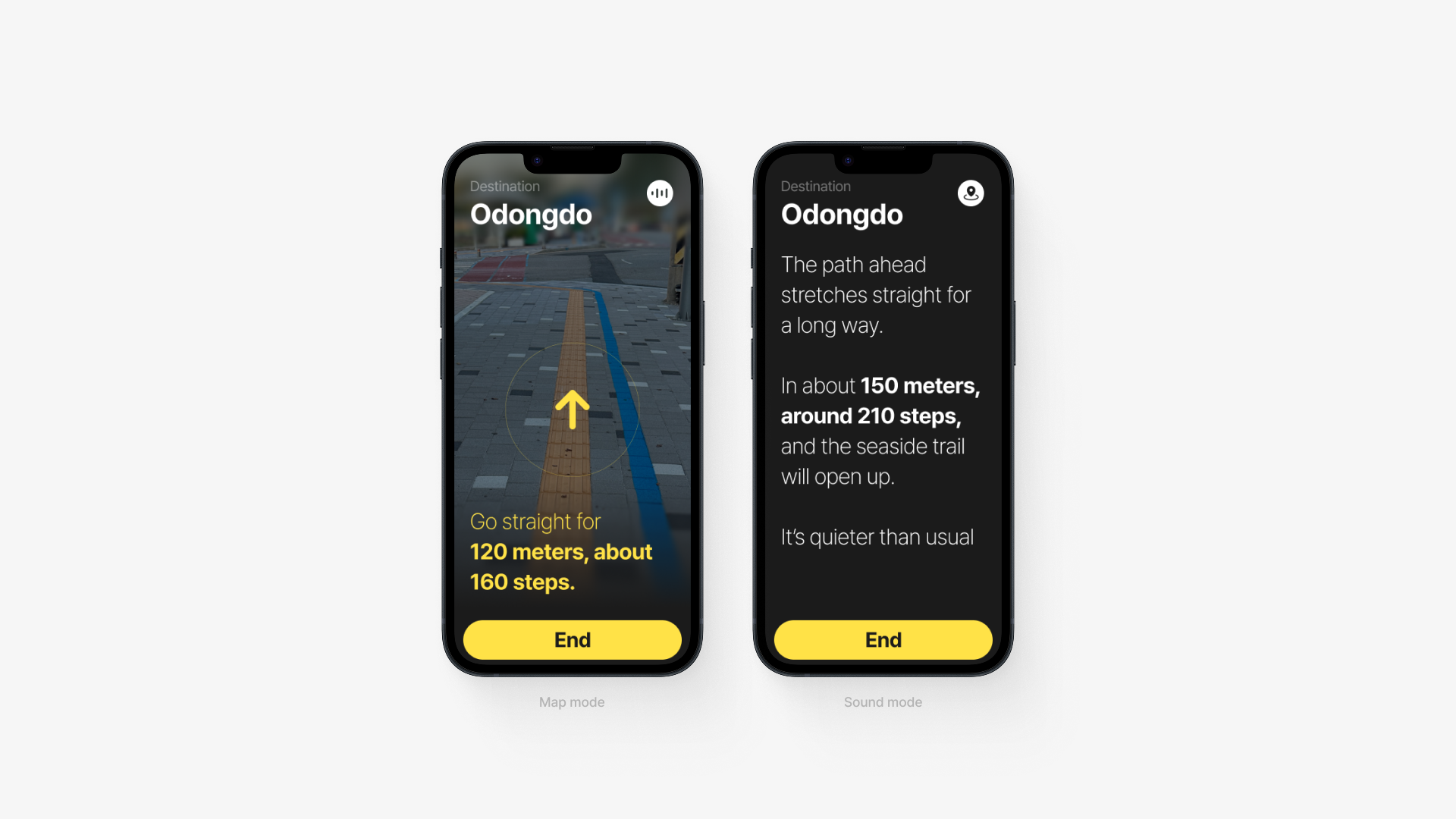

Silent Way offers two navigation modes so users can choose how they want to experience the journey.

Map Mode provides AR-based visual guidance, while Sound Mode delivers real-time voice descriptions of the path and surroundings. Together, they ensure safe, intuitive, and accessible travel for everyone.

Map mode

Map mode functions as the user’s eyes by providing camera-based AR navigation. The camera recognizes tactile paving, crosswalks, obstacles, and the surrounding environment in real time and delivers this information directly to the user for safe and intuitive guidance.

Sound mode

Sound mode allows users to navigate without looking at the screen. Through earphones, it provides real-time voice guidance that not only delivers distance and information but also conveys the surrounding environment and atmosphere, offering a more immersive travel experience.

Safety Features

Safety features are designed to help users quickly recognize and respond to emergencies or potential hazards during travel.

Emergency mode and vibration alerts draw immediate attention, enabling faster response in urgent situations.

In addition, color-coded cues further enhance safe and reliable navigation.

Emergency mode

Emergency mode is activated quickly by double-tapping the power button and instantly shares the user’s location with designated contacts and emergency services.

Safety alerts

Safety alerts provide vibration feedback near crosswalks or hazards and use color-coded cues for straight, left, and right directions to enhance orientation.

Gesture Interaction

Users can communicate with the app through simple head gestures, without the need to look at or touch the screen.

Up&Down for Yes

Left&Right for No
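As a rough illustration only, the yes and no head gestures (up and down for yes, left and right for no) could be told apart as sketched below. The project does not specify how gestures are detected, so the per-frame pitch and yaw angles, the threshold value, and every name in this sketch are hypothetical.

```python
from enum import Enum


class Answer(Enum):
    YES = "yes"
    NO = "no"
    UNCLEAR = "unclear"


def classify_head_gesture(pitch_deg, yaw_deg, min_range_deg=10.0):
    """Classify a short window of head-angle samples as yes, no, or unclear.

    pitch_deg and yaw_deg are hypothetical per-frame head angles in degrees
    (pitch = up/down, yaw = left/right). min_range_deg is an assumed threshold.
    """
    if not pitch_deg or not yaw_deg:
        return Answer.UNCLEAR
    pitch_range = max(pitch_deg) - min(pitch_deg)
    yaw_range = max(yaw_deg) - min(yaw_deg)
    # Ignore small movements, such as natural head sway while walking.
    if max(pitch_range, yaw_range) < min_range_deg:
        return Answer.UNCLEAR
    if pitch_range > yaw_range:
        return Answer.YES  # up-and-down movement dominates
    if yaw_range > pitch_range:
        return Answer.NO  # left-and-right movement dominates
    return Answer.UNCLEAR  # equal movement on both axes
```

Returning an explicit "unclear" result would let the app ask the question again by voice instead of guessing, which seems safer when the answer confirms a route or an emergency call.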

Context

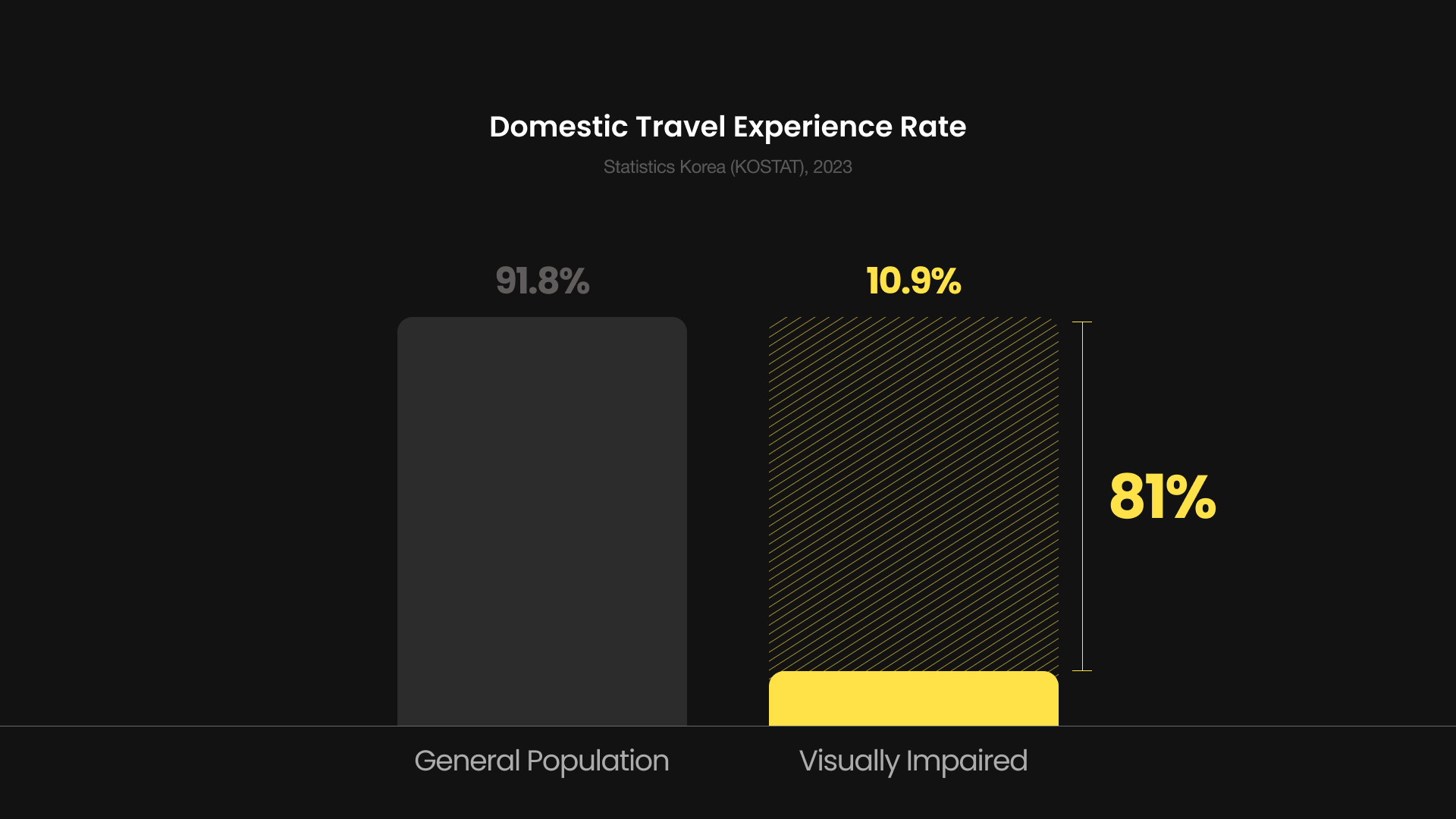

In South Korea, the domestic travel participation rate of visually impaired individuals was only 10.9% in 2023. Compared with the national average of 91.8% in the same year, this is about 81 percentage points lower and shows a serious lack of access to leisure and cultural activities for people with visual impairments.

This gap is due not only to a lack of physical infrastructure but also to limited access to travel information and a reliance on companions, which reduces autonomy. These conditions often lead to feelings of isolation and anxiety. Currently available audio tour services are mostly static and prerecorded, so they do not reflect real-time changes in the environment or provide immersive sensory experiences.

As a result, many of these services are guide-dependent, making it difficult for visually impaired individuals to enjoy truly self-directed travel experiences.

Research

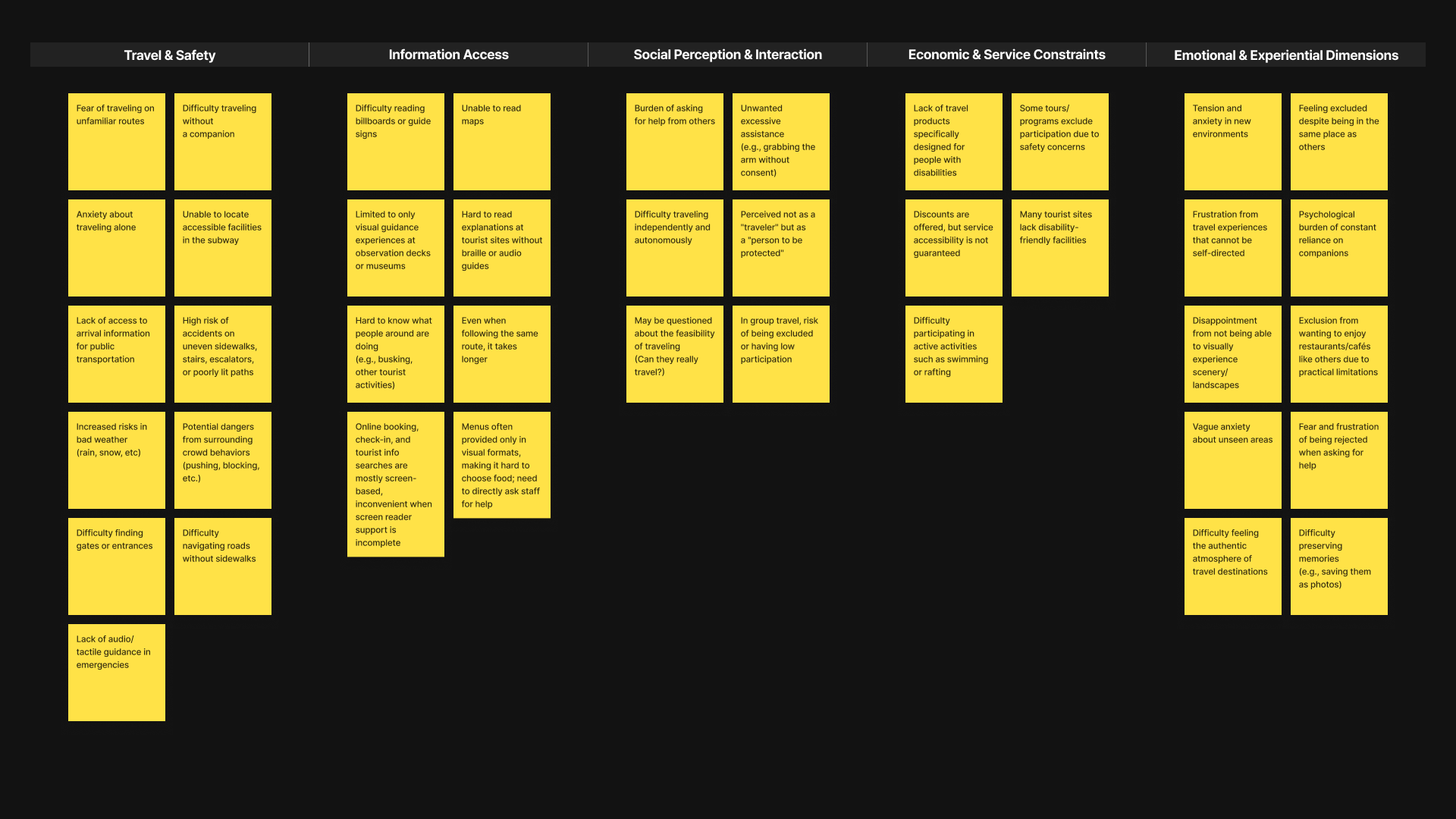

To design meaningful travel experiences for visually impaired individuals, it was essential to first gain a deep understanding of the challenges they face.

Rather than stopping at the notion of safe navigation, I began with desk research to analyze relevant statistics and existing services, identifying what current solutions often overlook.

Based on these insights, I mapped the user’s journey step by step, organizing the difficulties and emotions encountered at each stage into a pain point board.

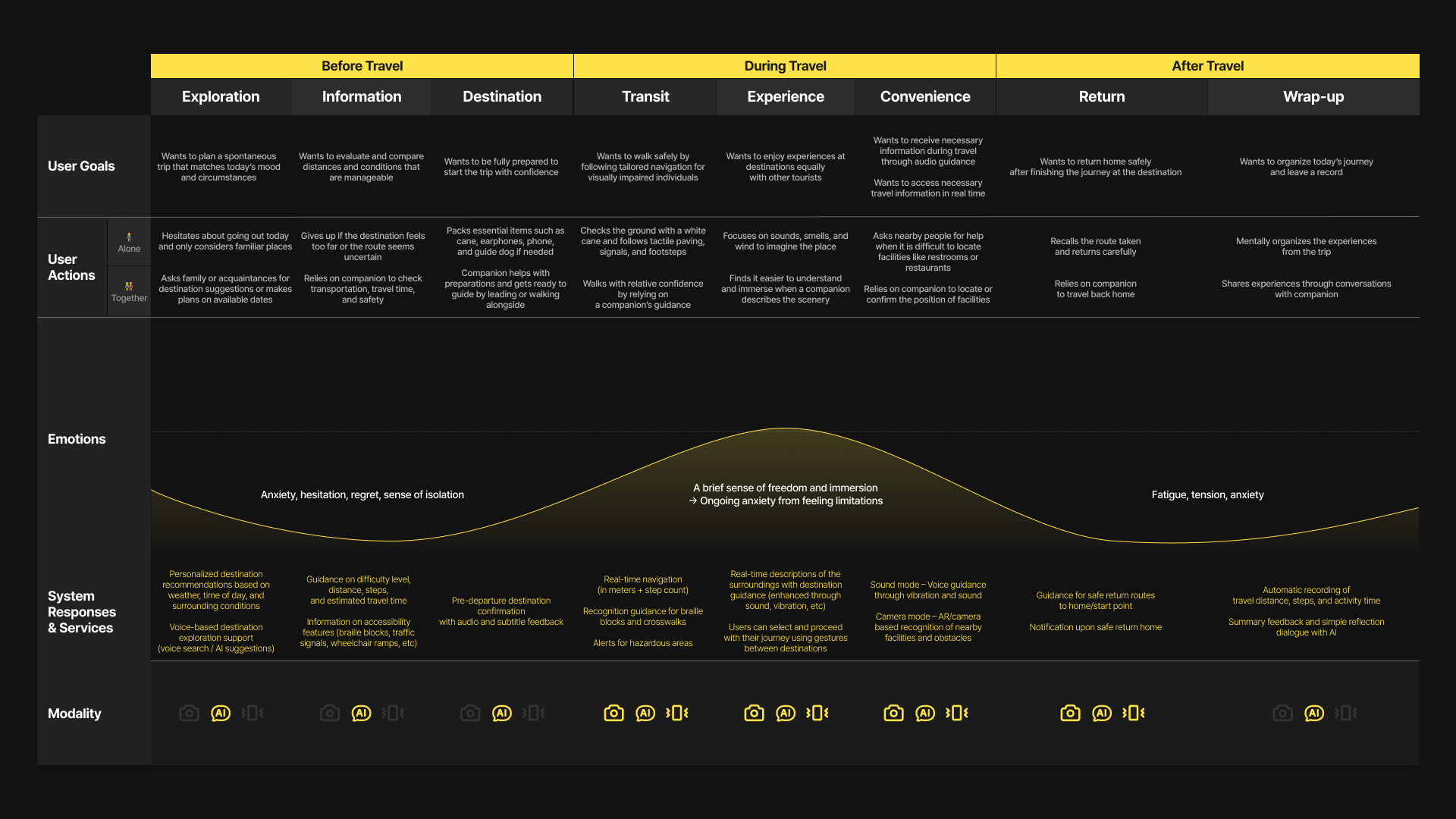

Finally, I created a journey map to capture the entire experience from preparation to post-travel, which helped uncover the key opportunities where technology could provide meaningful support.

Solution

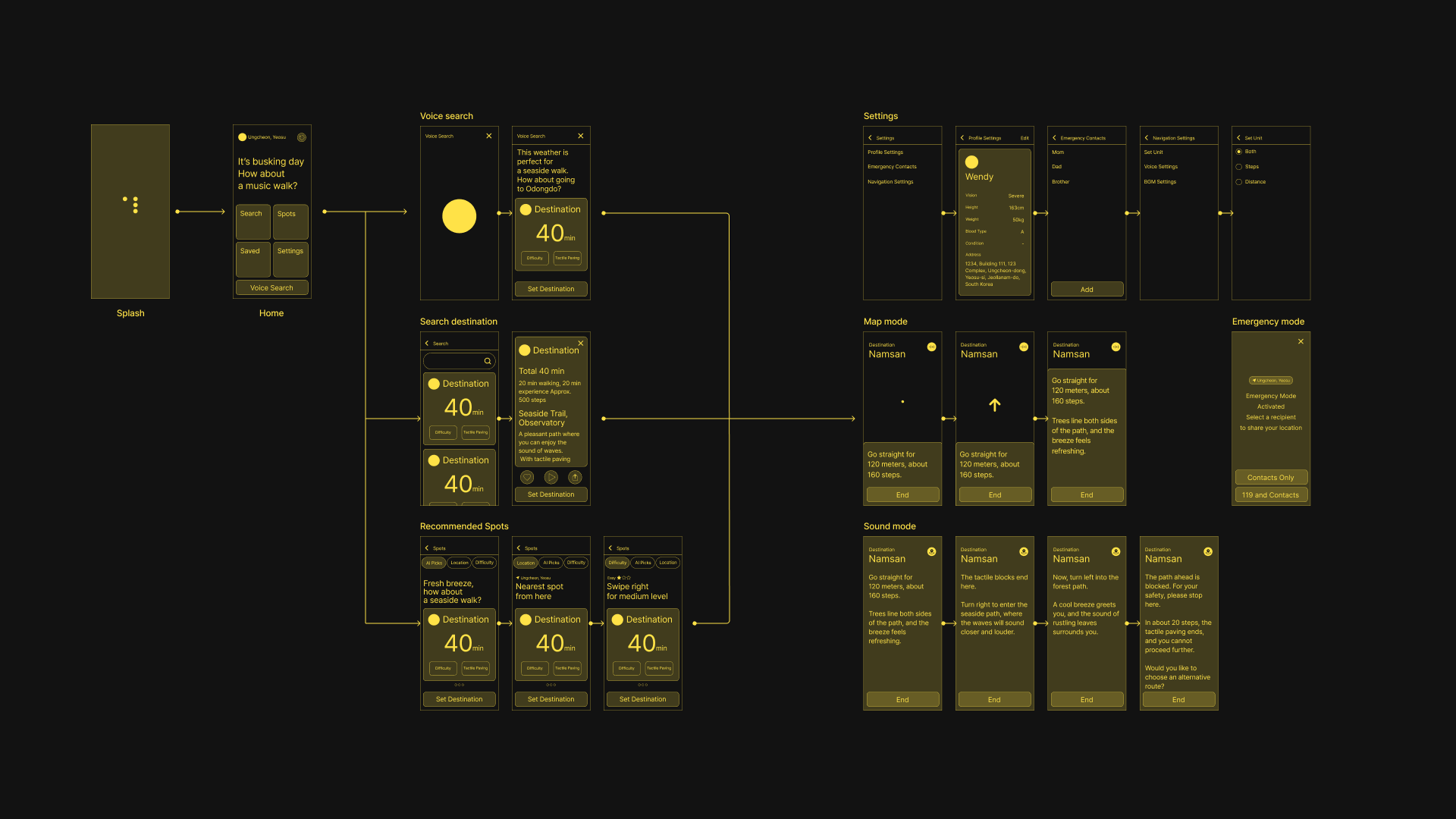

Based on these insights, I identified destination search, real-time route guidance, and emergency response as the most essential functions for visually impaired travelers.

Accordingly, I created a wireframe in which these core features are placed where users would intuitively expect them. The interface was designed around simple inputs such as voice, vibration, and gesture, ensuring that it remains accessible and easy to use across various contexts.

Visual Design & Accessibility

The GUI was designed with a focus on users with low vision, employing high-contrast colors and large typography to maximize readability. A consistent layout structure was also applied across screens to reduce cognitive load and ensure smooth navigation.

Home

The home screen was designed to help users quickly orient themselves and make meaningful choices as soon as they enter the app.

AI recommends destinations based on the weather and the user’s current location, allowing them to either select a suggested option or set their own through additional search.

The primary voice search feature was placed at the bottom of the screen for quick, one-step access. In addition, large buttons were used so that users with low vision can easily distinguish key functions and operate them comfortably.

Personalized Recommendations

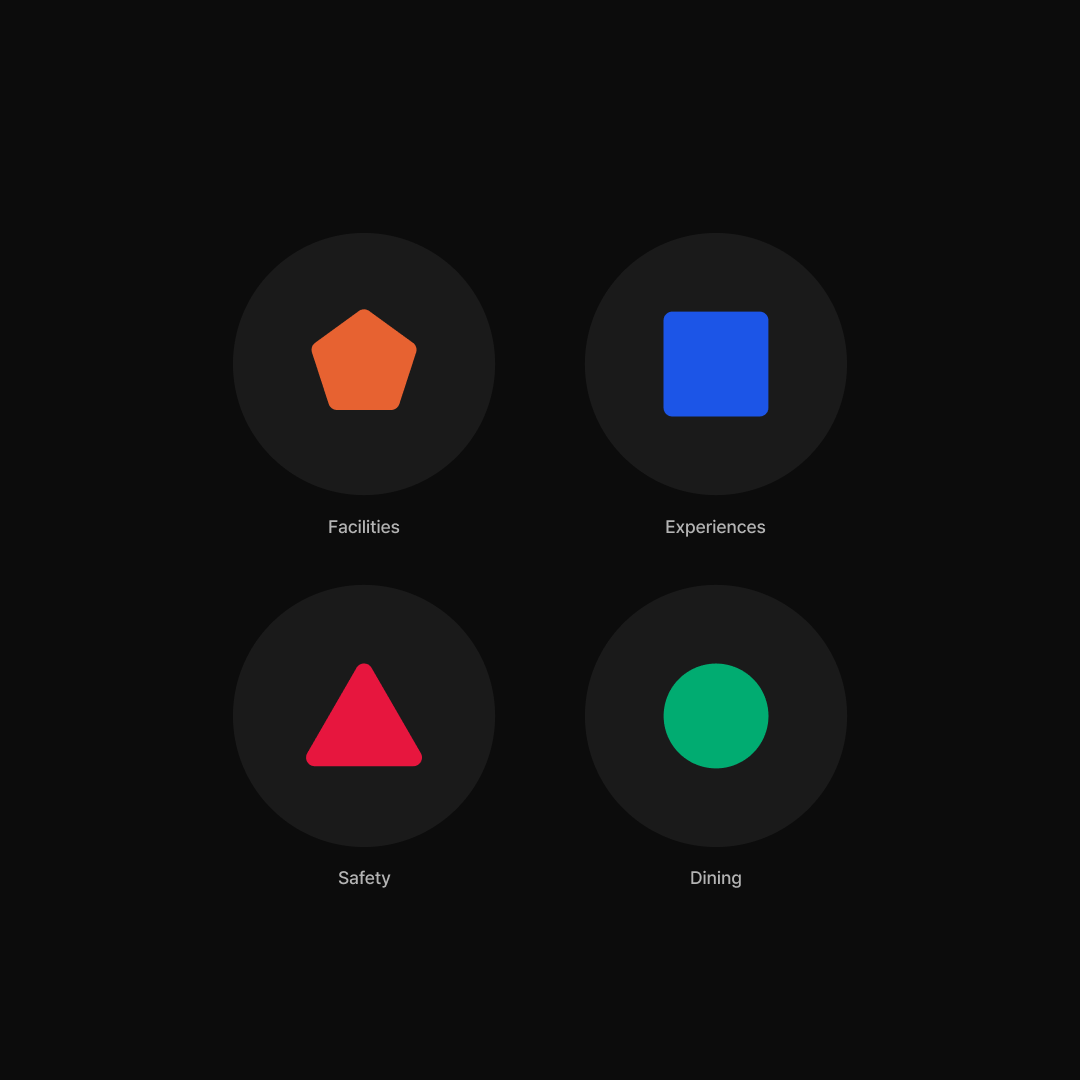

AI considers not only the user’s current location and weather, but also factors such as mobility conditions, difficulty level, distance, the presence of tactile paving, and estimated travel time to suggest the most suitable destination.

Users can explore destinations through AI recommendations, by location, or by difficulty level, and they can further refine their choices using categories such as facilities, attractions, safety, or dining.

Category icons combined with voice guidance help users clearly identify and quickly access the information they need.

Navigation Mode

Navigation mode is a core feature designed to help users travel safely and efficiently to their destination.

By turning the camera and location data into the user’s eyes, the system perceives and explains the surroundings, enabling people with visual impairments to move independently with confidence.

Users can choose between visual or audio-based guidance depending on their situation, ensuring accessibility across diverse contexts.

Map Mode

With a camera-based AR interface, Map Mode displays tactile paving, crosswalks, and obstacles on the screen.

Arrows and guiding text provide clear instructions about the route and nearby elements, making navigation intuitive for users who prefer visual feedback. A single button allows users to switch to Sound Mode when needed.

Sound Mode

Sound Mode delivers real-time voice guidance and ambient descriptions through earphones, enabling safe navigation without relying on the screen.

It supports not only people with visual impairments but also anyone in conditions where viewing the screen is difficult, such as when carrying luggage or moving through crowded areas.

Risk Response

The system instantly detects potential dangers during travel and alerts the user.

In situations that threaten safety, such as crosswalks, interrupted tactile paving, obstacles, or approaching vehicles, it provides a stop warning and suggests alternative routes.

In case of an emergency, the system activates an emergency mode that automatically sends the user’s location to 119 or pre-registered emergency contacts, along with user information, to support a swift response.
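The risk response described above could be outlined as a simple mapping from detected events to responses. This is a minimal sketch under stated assumptions, not the project's implementation: the event labels, the `Response` fields, and the choice to notify both 119 and every pre-registered contact are all hypothetical.

```python
from dataclasses import dataclass, field

# Hazards named in the text; the string labels themselves are assumptions.
HAZARDS = frozenset(
    {"crosswalk", "interrupted_tactile_paving", "obstacle", "approaching_vehicle"}
)


@dataclass
class Response:
    stop_warning: bool = False
    suggest_alternative_route: bool = False
    share_location_with: list = field(default_factory=list)


def respond(event, emergency_contacts=None):
    """Map a detected event (a hypothetical string label) to a response."""
    if event == "emergency":
        # Assumption: the location goes to 119 and to all registered contacts.
        return Response(share_location_with=["119", *(emergency_contacts or [])])
    if event in HAZARDS:
        # Hazards trigger a stop warning plus an alternative route suggestion.
        return Response(stop_warning=True, suggest_alternative_route=True)
    return Response()  # nothing detected: guidance continues as usual
```

For example, `respond("obstacle")` would produce a stop warning with an alternative route suggestion, while `respond("emergency", ["guardian"])` would share the location with 119 and the listed contact.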

Reflection

Through this project, I learned that designing for people with visual impairments goes beyond applying accessibility guidelines.

It requires a deep understanding of real mobility contexts and emotional needs. Although limited user testing and technical constraints left some aspects unverified, the project allowed me to trace the emotions and discomforts of visually impaired users through scenario-based exploration and reflect on how a designer could contribute meaningfully.

It also revealed the potential of integrating multimodal interfaces and AI technologies to further support safer and more autonomous mobility.