How might we enable people to feel the emotions they want, when they need them?

This project is a concept design that explores how biometric data could reveal users’ emotional rhythms and visualize their state in real time. Using heart rate variability (HRV) and movement data as reference signals, it proposes an emotional state tracking system and demonstrates it through an interactive prototype built with Vibe Coding.

GLASS SOUL

Deliverables

UX Flow

GUI

Visual Design & UI Kit Prototypes

Design Guidelines

Vibe Coding

Role

UX Designer

Team

Solo project

Timeline

Oct - Nov 2025

Context

Recognizing Emotions, Beyond the Numbers

People today can easily measure many kinds of biometric data, such as sleep, steps, and heart rate, yet they still struggle to recognize or regulate their emotions.

Even when stress or fatigue builds up, most technology treats these signals only as physical indicators and fails to interpret them as emotional ones.

As a result, we often recognize our emotions only after they have reached their limit. Glass Soul began from a shift in perspective: reading the body’s signals as emotional cues rather than purely physical ones.

What does it take to truly feel the emotions I desire? And if I make an effort to become those emotions, can I actually reach them?

Research

Instead of surveying emotions directly, I explored how bodily rhythms and everyday behaviors could reveal emotional states.

I researched how biosignals such as heart rate variability and movement patterns can be interpreted as emotional cues, focusing on possible methods for mapping them to emotional states.

I also examined biometric information available from commercial wearables to identify the physiological metrics most relevant to Glass Soul’s emotional mapping model.

Design

The interface was designed with a goal-first structure, ensuring that users begin by setting the emotional tone they want to maintain. This decision was based on the insight that emotion regulation becomes clearer when users anchor themselves to a single intention.

Real-time sensing, contextual suggestions, and recovery actions were arranged as supportive layers that help users move through recognizing their state, regulating it, and reflecting on the change.

Wireframe

Based on the core concept of the Emotion Flow System, I defined emotion goal setting, real-time detection, feedback, and emotional history as the key elements that help users reach their desired emotional state.

Accordingly, I designed a wireframe that organizes these features into a simple and intuitive flow, where goal setting, behavioral suggestions, and feedback connect seamlessly to form a continuous emotional loop.

The overall layout emphasizes clarity and balance, allowing users to recognize, regulate, and reflect on their emotions through a calm and cohesive interface experience.

Home

The core structure of the app is designed around the journey of helping users reach their desired emotional state. At the bottom of the home screen, an Emotion Goal button allows users to set their target emotion at any time and define the emotional direction for the day.

The system analyzes biometric data such as heart rate variability and activity levels to provide contextual suggestions, including breathing exercises, music, or recovery routines, suited to the user’s current condition. Once an emotion goal is set, a Glass Bead symbolizing that emotion appears on the home screen, and the app continues to provide supportive actions that help users stay aligned with their goal in a gentle, non-intrusive way.

If emotions such as anxiety, stress, tension, or gloom are detected, the system suggests immediate recovery actions such as breathing exercises, stretching, or short breaks.

When a suggestion does not feel right, users can dismiss it and receive alternative actions designed to help improve their HRV and restore balance.
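The dismiss-and-replace behavior can be pictured as an ordered list of candidate actions for each detected state. The TypeScript sketch below is a hypothetical illustration, not the prototype’s code. It reuses the four state labels from the Emotional Recovery Flow. The action lists and the `nextSuggestion` helper are assumptions made for this example.

```typescript
type RecoveryState = "Fatigue" | "Stress" | "Gloomy" | "Calm";

// Hypothetical ordered action lists per state; earlier entries are offered first.
const candidates: Record<RecoveryState, string[]> = {
  Fatigue: ["short break", "stretching", "energizing music"],
  Stress: ["box breathing", "stretching", "calming music"],
  Gloomy: ["short walk", "uplifting music", "message a friend"],
  Calm: ["take a photo", "write an emotion note"],
};

// Returns the first action the user has not dismissed yet,
// or null once every option for that state has been dismissed.
function nextSuggestion(state: RecoveryState, dismissed: string[]): string | null {
  for (const action of candidates[state]) {
    if (dismissed.indexOf(action) === -1) return action;
  }
  return null;
}

// Example: the user dismisses the first Stress suggestion and is offered the next one.
const dismissed: string[] = [];
const firstOffer = nextSuggestion("Stress", dismissed);
if (firstOffer !== null) dismissed.push(firstOffer);
const secondOffer = nextSuggestion("Stress", dismissed);
console.log(firstOffer, "->", secondOffer); // box breathing -> stretching
```

Keeping each list ordered lets the most relevant action appear first. A fuller version could reorder the list using the user’s past responses, which this sketch does not model.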

Emotional Recovery Flow

This flow visualizes how the system detects emotional downturns and supports the user’s recovery in an immediate and context-aware way. Based on HRV data, the system recognizes four emotional states: Fatigue, Stress, Gloomy, and Calm.

When users experience Fatigue, Stress, or Gloomy states, the system provides personalized recovery suggestions such as breathing exercises, music, or actions derived from previous user data. When in a Calm state, it encourages emotion-expanding behaviors such as taking a photo or writing a short emotion note to help sustain and reflect on positive emotions.

Each state is paired with tailored guidance, allowing users to actively recognize and regulate their emotional rhythm throughout the day.

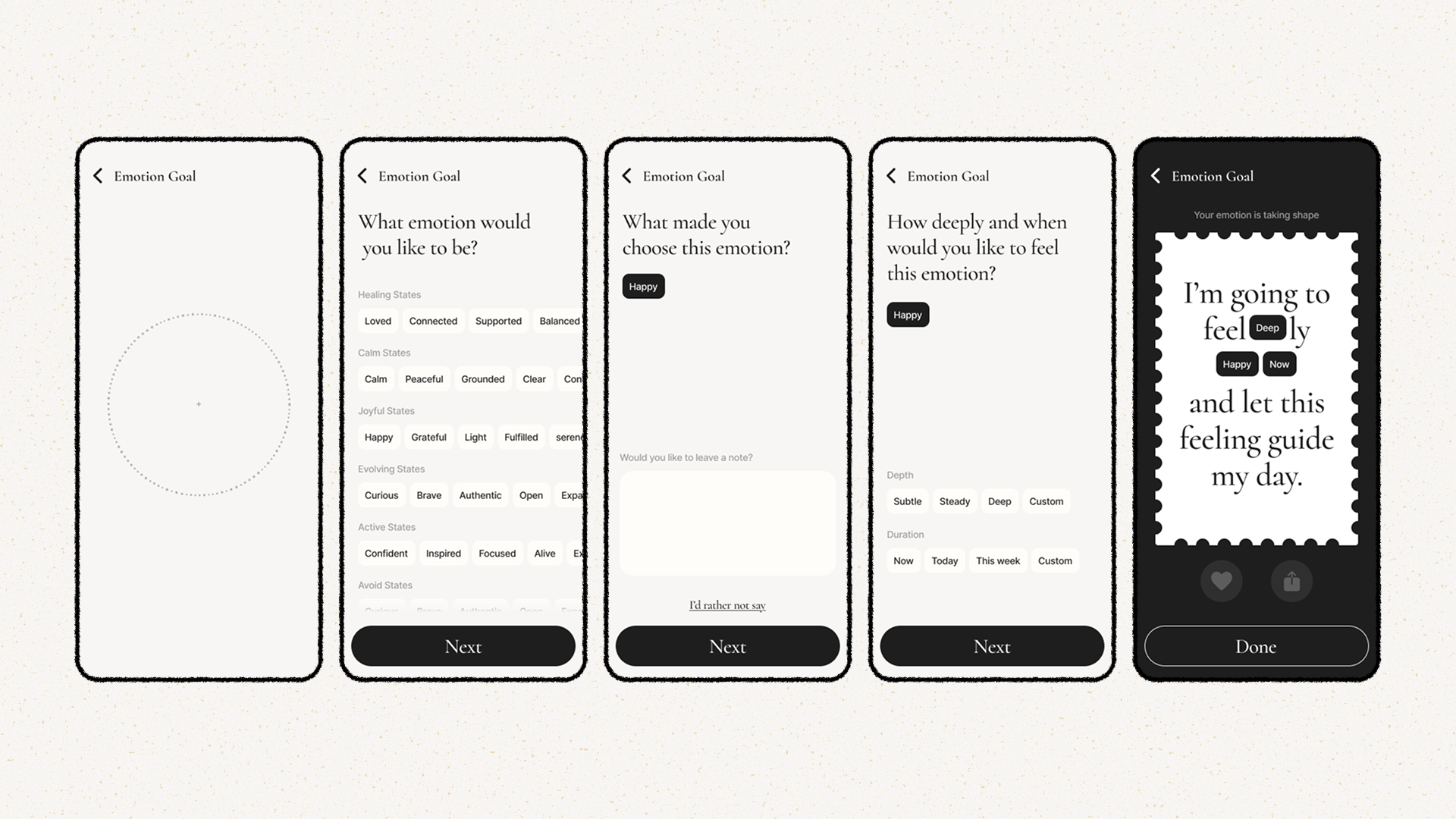
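One way to picture the four-state detection described above is a small rule set over HRV and movement. The sketch below is hypothetical and makes four assumptions:

- RMSSD is used as the HRV measure.
- Each reading is compared with the user’s personal baseline.
- The thresholds (`CALM_HRV_RATIO`, `ELEVATED_HR_RATIO`, `ACTIVE_STEPS`) are made-up values, not validated ones.
- The `classify` helper itself is illustrative only.

None of these choices come from the project.

```typescript
type EmotionState = "Fatigue" | "Stress" | "Gloomy" | "Calm";

interface Reading {
  rmssdMs: number;              // current HRV as RMSSD in milliseconds (assumed metric)
  baselineRmssdMs: number;      // the user's personal HRV baseline
  heartRateBpm: number;         // current heart rate
  baselineHeartRateBpm: number; // the user's usual resting heart rate
  stepsLastHour: number;        // movement signal from the wearable
}

// Illustrative thresholds only: assumptions for this sketch, not validated values.
const CALM_HRV_RATIO = 0.9;    // HRV at or near baseline counts as Calm
const ELEVATED_HR_RATIO = 1.1; // heart rate 10% above baseline counts as elevated
const ACTIVE_STEPS = 1500;     // steps in the last hour that count as recent activity

function classify(r: Reading): EmotionState {
  if (r.baselineRmssdMs <= 0 || r.baselineHeartRateBpm <= 0) {
    throw new Error("baselines must be positive");
  }
  const hrvRatio = r.rmssdMs / r.baselineRmssdMs;
  if (hrvRatio >= CALM_HRV_RATIO) return "Calm";
  // Below this point HRV is suppressed relative to baseline.
  // Movement is checked first so that exercise is not mistaken for stress.
  if (r.stepsLastHour >= ACTIVE_STEPS) return "Fatigue";
  if (r.heartRateBpm >= r.baselineHeartRateBpm * ELEVATED_HR_RATIO) return "Stress";
  return "Gloomy";
}

// Guidance per state, following the flow: recover from three states, expand Calm.
const guidance: Record<EmotionState, string> = {
  Fatigue: "recover: short break or rest",
  Stress: "recover: guided breathing",
  Gloomy: "recover: music or actions that helped before",
  Calm: "expand: take a photo or write an emotion note",
};

const currentState = classify({
  rmssdMs: 28,
  baselineRmssdMs: 45,
  heartRateBpm: 88,
  baselineHeartRateBpm: 70,
  stepsLastHour: 200,
});
console.log(currentState, "->", guidance[currentState]); // Stress -> recover: guided breathing
```

Separating Gloomy from Fatigue reliably would likely need richer signals than the three this sketch uses. The rules mainly show how each detected state could route to its own guidance.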

Emotional Goal Setting

This flow guides users to define the emotion they want to feel and reflect on the reasons behind it.

Users explore why they wish to experience a certain emotion and decide how deeply and for how long they want to sustain it.

In the final step, these choices are transformed into a personalized affirmation that helps users make an emotional commitment to becoming that feeling.

Through this process, the emotional goal becomes more than a simple selection, evolving into an experience shaped by intentional awareness and self-reflection.
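The final step of this flow turns the user’s answers into an affirmation. That step can be sketched as a simple template. In this TypeScript example, the `EmotionGoal` fields, the three intensity levels, and the sentence wording are all hypothetical. The actual copy used in the flow is not reproduced here.

```typescript
type Intensity = "light" | "moderate" | "deep";

interface EmotionGoal {
  emotion: string;       // the target emotion, e.g. "calm"
  reason: string;        // the user's answer to "why do I want to feel this?"
  intensity: Intensity;  // how deeply the feeling should be sustained
  durationHours: number; // how long the goal should stay active
}

// Hypothetical wording for each intensity level.
const intensityWord: Record<Intensity, string> = {
  light: "lightly",
  moderate: "steadily",
  deep: "deeply",
};

// Combines the goal-setting answers into a first-person affirmation.
function toAffirmation(goal: EmotionGoal): string {
  const span = goal.durationHours === 1 ? "hour" : `${goal.durationHours} hours`;
  return (
    `I am becoming ${goal.emotion}. For the next ${span}, I will hold this feeling ` +
    `${intensityWord[goal.intensity]}, because ${goal.reason}.`
  );
}

const affirmation = toAffirmation({
  emotion: "calm",
  reason: "I want to stay present in today's meetings",
  intensity: "moderate",
  durationHours: 3,
});
console.log(affirmation);
// I am becoming calm. For the next 3 hours, I will hold this feeling steadily, because I want to stay present in today's meetings.
```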

Calendar

The calendar module visualizes emotional changes on a daily, weekly, and monthly basis, helping users easily recognize their emotional patterns.

Through the summary view, users can intuitively reflect on questions such as “Which emotions did I feel most often this month?” and “Am I currently in a stable state?”

This structure allows Glass Soul to represent emotions not as raw data but as a rhythm of daily feelings and recovery experiences, helping users develop the ability to consciously perceive and regulate their emotions.
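The two summary questions map naturally onto two small queries over an emotion log. This sketch makes three assumptions:

- Each log entry stores an ISO date and one of the four recovery-flow states.
- The helper names are hypothetical.
- The stability rule (at least 60% Calm among the most recent entries) is a placeholder, not a definition from the project.

```typescript
type EmotionState = "Fatigue" | "Stress" | "Gloomy" | "Calm";

interface EmotionLog {
  date: string;        // ISO date, e.g. "2025-11-03"
  state: EmotionState; // state recorded for that entry
}

const STATES: EmotionState[] = ["Fatigue", "Stress", "Gloomy", "Calm"];

// "Which emotions did I feel most often this month?" for a "YYYY-MM" month.
// Ties resolve to the state listed first in STATES; returns null if the month has no logs.
function mostFrequentInMonth(logs: EmotionLog[], month: string): EmotionState | null {
  const inMonth = logs.filter((log) => log.date.slice(0, 7) === month);
  if (inMonth.length === 0) return null;
  let best: EmotionState = STATES[0];
  let bestCount = -1;
  for (const s of STATES) {
    const count = inMonth.filter((log) => log.state === s).length;
    if (count > bestCount) {
      best = s;
      bestCount = count;
    }
  }
  return best;
}

// "Am I currently in a stable state?" Hypothetical rule: at least
// minCalmShare of the most recent recentCount entries are Calm.
function isStable(logs: EmotionLog[], recentCount = 7, minCalmShare = 0.6): boolean {
  const recent = logs
    .slice()
    .sort((a, b) => a.date.localeCompare(b.date))
    .slice(-recentCount);
  if (recent.length === 0) return false;
  const calmCount = recent.filter((log) => log.state === "Calm").length;
  return calmCount / recent.length >= minCalmShare;
}

const logs: EmotionLog[] = [
  { date: "2025-10-30", state: "Stress" },
  { date: "2025-11-01", state: "Stress" },
  { date: "2025-11-02", state: "Calm" },
  { date: "2025-11-03", state: "Stress" },
  { date: "2025-11-04", state: "Calm" },
  { date: "2025-11-05", state: "Calm" },
];
console.log(mostFrequentInMonth(logs, "2025-11")); // Calm
console.log(isStable(logs), isStable(logs, 3));    // false true
```

Daily and weekly views could reuse the same filtering with a different date prefix or range.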

Coding

The prototype was developed with Claude to show how users’ emotional goals could be transformed into interactive responses. When a user set an emotional goal, the interface reflected it dynamically through real-time changes in tone, motion, and color. The purpose of this implementation was to go beyond static visual design and demonstrate how an interface could respond to emotional input. Through this process, an abstract concept was translated into a working emotion-based interaction prototype, bridging the gap between feeling and technology.
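The goal-driven visual response can be reduced to a style lookup plus an interpolation. The TypeScript sketch below is not the prototype’s code. The goal names, HSL values, pulse durations, and the `blend` and `toCss` helpers are hypothetical. They were chosen only to show how tone, motion, and color could follow a selected goal.

```typescript
type Goal = "calm" | "joy" | "focus";

interface VisualStyle {
  hue: number;          // degrees on the color wheel, 0 to 360
  saturation: number;   // percent
  lightness: number;    // percent
  pulseSeconds: number; // length of one pulse of the Glass Bead animation
}

// Hypothetical goal-to-style table; the prototype's real palette is not documented.
const goalStyles: Record<Goal, VisualStyle> = {
  calm: { hue: 200, saturation: 45, lightness: 70, pulseSeconds: 6 },
  joy: { hue: 45, saturation: 85, lightness: 65, pulseSeconds: 2.5 },
  focus: { hue: 260, saturation: 35, lightness: 55, pulseSeconds: 4 },
};

// Moves a fraction t (clamped to 0..1) of the way from one style to another,
// so the interface can drift toward the goal instead of jumping.
function blend(from: VisualStyle, to: VisualStyle, t: number): VisualStyle {
  const k = Math.min(1, Math.max(0, t));
  const mix = (a: number, b: number) => a + (b - a) * k;
  // Signed hue difference in [-180, 180): take the shorter way around the wheel.
  const hueDelta = ((to.hue - from.hue + 540) % 360) - 180;
  return {
    hue: (from.hue + hueDelta * k + 360) % 360,
    saturation: mix(from.saturation, to.saturation),
    lightness: mix(from.lightness, to.lightness),
    pulseSeconds: mix(from.pulseSeconds, to.pulseSeconds),
  };
}

// Converts a style into CSS values the UI layer could apply.
function toCss(style: VisualStyle): { background: string; animationDuration: string } {
  return {
    background: `hsl(${Math.round(style.hue)}, ${Math.round(style.saturation)}%, ${Math.round(style.lightness)}%)`,
    animationDuration: `${style.pulseSeconds.toFixed(2)}s`,
  };
}

// Halfway through a transition from a calm goal to a joyful one.
const halfway = toCss(blend(goalStyles.calm, goalStyles.joy, 0.5));
console.log(halfway.background, halfway.animationDuration); // hsl(123, 65%, 68%) 4.25s
```

Calling `blend` on each animation frame with a growing `t` would produce the gradual shift in tone, motion, and color. A slower pulse reads as calmer and a faster one as more energetic.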

Reflection

Through this project, I experimented with how emotions could be communicated through interaction by translating emotional design concepts into actual code. This let me explore expressive elements such as color, motion, and speed in a tangible way.

However, this expressive layer also revealed a deeper technical limitation. While the interface could visually represent emotional shifts, it could not reflect the user’s actual emotional state in context, because the system had no way to detect or interpret emotional data in real time. This gap between expression and detection was a constraint I could not overcome within the scope of a solo project.

Yet this limitation clarified the next direction. By connecting real-time biometric or behavioral data, the system could measure emotional changes quantitatively and reflect them dynamically in the interface’s responses, evolving from a conceptual prototype into a practical emotion-responsive interaction model.